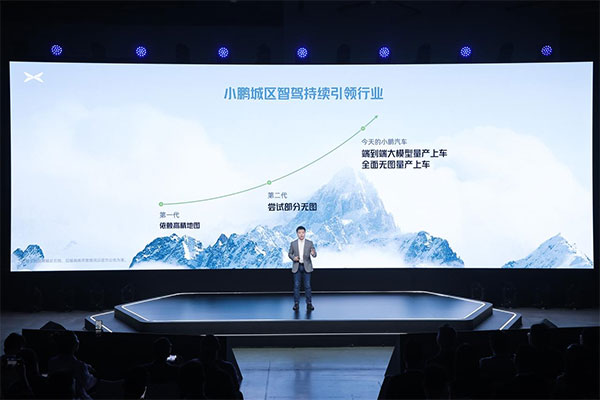

XPENG Motors (“XPENG” or the “Company,” NYSE: XPEV and HKEX: 9868), a leading Chinese smart electric vehicle (“Smart EV”) company, today showcased its latest advancements in AI technology and announced the full rollout of its Tianji in-car operating system, XOS 5.1.0, to all eligible XPENG models during the XPENG AI DAY event themed "Pioneering a new era of smart AI driving."

At the press conference, XPENG Chairman and CEO He Xiaopeng also announced major news: "The first model of the MONA series will debut in June this year," and clearly outlined XPENG's new market positioning as the global pioneer and promoter of AI smart driving.

XPENG has introduced China’s first mass-produced end-to-end model for smart driving, made up of the neural network XNet, the planning and control large model XPlanner, and the large language model XBrain. This integrated system doubles intelligent driving capabilities.

The XNet deep visual neural network combines dynamic XNet, static XNet, and the industry's first mass-produced pure vision 2K occupancy network. This system empowers the autonomous driving system with capabilities comparable to LiDARs, using pure vision and creating a 3D naked-eye experience. The 2K pure vision network reconstructs the world with over 2 million grids, providing 3D representations of drivable spaces and clearly recognizing every detail of static obstacles. Its perception range is doubled, covering an area equivalent to 1.8 football fields, and it can accurately identify over 50 types of objects, allowing it to see further and more clearly.

Moreover, XPENG has introduced the neural network-based planning model, XPlanner. XPlanner, like a human cerebellum, continually trains with vast amounts of data, making driving strategies increasingly human-like. This reduces driving jerks by 50%, improper stops by 40%, and passive human takeovers by 60%, providing an "experienced driver"-like smart driving experience that significantly enhances user comfort and safety.

With the introduction of the AI large language model architecture, XBrain, XPENG's autonomous driving system gains human-like learning and comprehension capabilities, significantly improving its handling of complex and even unknown scenarios as well as its reasoning about and understanding of real-world logic. For example, with the support of XBrain, the autonomous driving system can recognize turn zones, tidal lanes, special lanes, and road sign text, as well as various behavioral commands such as stop and go, fast and slow. This enables it to make human-like driving decisions that balance safety and performance.

Based on over 1 billion kilometers of video training, more than 6.46 million cumulative kilometers of real-world testing, and over 216 million cumulative kilometers of simulation testing, XPENG's end-to-end large model can iterate every two days. In the next 18 months, smart driving capabilities are expected to improve 30-fold. By the third quarter of this year, XPENG aims to extend XNGP coverage to all roads in China, offering an urban smart driving experience that will rival its highly acclaimed highway smart driving by 2025.

Additionally, He Xiaopeng said at the press conference, "By 2025, XPENG will achieve L4-level autonomous driving experience in China," adding that XPENG is currently testing the end-to-end capabilities of XNGP globally, hastening the arrival of fully autonomous driving.